
Lecture 4: Introduction to Programming


Any program is nothing more than a set of instructions for the computer. The computer will execute the instructions one after the other, in principle in the order in which they are written (apart from so-called branching instructions such as if, if..else and switch, which we will see later). It will do nothing more and nothing less than what we tell it to do.

Moreover, we have to give (write) the instructions with a lot of care. The computer understands only what we have taught it to understand.

Software engineering

Creating programs always consists of the following steps:

-

Think! Study and analyze the problem. Collect information. Come up with

a possible solution. Don't touch that keyboard yet! Use pen and paper.

-

Write the program. Use any editor you like to enter it.

-

Eliminate the errors from the program. This is called "debugging". There

are several types of errors:

-

compile-time errors, such as typing errors (for example, writing prinntf instead of printf). These are easy to eliminate: the compiler helps us by telling us something like "type-mismatch error in line 34".

-

programmatic errors, for example forgetting to initialize our variables (set them to a certain starting value). Such errors can also cause so-called "run-time errors", for example a "division by zero" error.

-

logical errors (for example, we do not know that the Pythagoras rule is $a^2 + b^2 = c^2$). The program will run without generating errors, but the result will not be what we wanted.

-

Analyze the result. Is this what you wanted? Maybe the program wrote "The square root of 9 is 4". Clearly not what we wanted.

-

If necessary, go back to step 3 (debugging), step 2 (writing) or even step 1 (thinking).

Spending some more time on step 1 can often save a lot of time in the other steps.


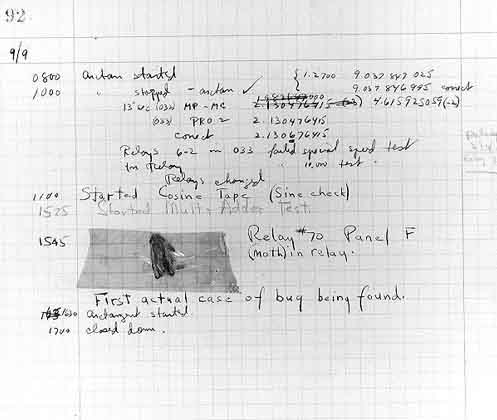

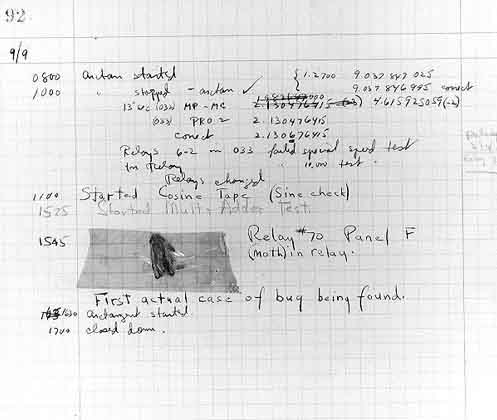

The First Computer Bug

Grace Murray Hopper, working in a temporary World War I building at Harvard University on the Mark II computer, found the first computer bug beaten to death in the jaws of a relay. She glued it into the logbook of the computer, and thereafter, when the machine stopped (frequently), they told Howard Aiken that they were "debugging" the computer. The very first bug still exists in the National Museum of American History of the Smithsonian Institution. Edison had used the word bug and the concept of debugging previously, but this was probably the first verification that the concept applied to computers. (copied from http://www./firstcomputerbug.html)

The C programming language

C was invented at Bell Labs in 1971-1973. It was an evolution of the language B, which in turn was based on BCPL. In 1983 the American National Standards Institute (ANSI) started to standardize the language; the standard approved in 1989 (known as ANSI C or C89) became the official version. It has long been one of the most widely used programming languages in the world.

The evolution of C went hand-in-hand with the evolution of the UNIX operating system, which we are going to use in our lectures (in the form of Linux, a free UNIX-like operating system). In fact, most of UNIX itself was written in C.

A program is a sequence of instructions, or statements, which tell the computer a specific task we want it to do.

Most modern programming languages have a very readable format, close to English, making it easy for humans to read and write programs. This is in contrast to earlier programming languages, which were closer to what the computer understands. See, for example, the assembler language (Lecture 2).

A very simple C program:

#include <stdio.h>

int main(void)
{
  printf("Hello World\n");
}

Let's take a look at this program.

-

Every line that starts with a hash (#) is an instruction for the compiler (more precisely, for its first stage, the preprocessor) rather than a command that will be executed at run time. #include <stdio.h> tells the compiler to read the file stdio.h ("standard input-output"), which describes the functions of the stdio library, so that it understands what is coming. We will use the function printf, which is part of the stdio library.

-

After this we can write our own procedures and functions (see the lecture

on modular programming).

-

"main" is a special function. The first instruction of this function is

always the first instruction to be executed. For the first couple of weeks

we will only write instructions in this function.

-

Therefore, the instruction printf("Hello World\n") is executed first, and since it is the only instruction, this also finishes the program. The instruction writes the text Hello World on the screen and then moves to the next line (\n).

Reserved keywords in ANSI C

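auto, break, case, char, const, continue, default, do, double, else, enum, extern, float, for, goto, if, int, long, register, return, short, signed, sizeof, static, struct, switch, typedef, union, unsigned, void, volatile, while

These are the 32 keywords of ANSI C (C89). Later standards such as C99 add a few more, for example inline and restrict.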

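Note that sizeof looks like a function, but it is in fact an operator built into the language: it gives the size, in bytes, of a type or a variable, and no library is needed to use it.

The real functions, such as printf, are not part of the language itself but are described in so-called libraries. For example, printf can be found in the library stdio. We therefore have to put the compiler directive #include <stdio.h> at the beginning of our code.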

Identifiers

Identifiers, as the name already says, are used for identifying things. These can be names of functions, names of variables and other things that we will see in later lectures. Like in most languages, identifiers have some restrictions:

-

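They must start with a letter or the underscore character "_"; "20hours" is not allowed. (Names starting with "_" are best avoided, because many of them are reserved for the compiler and its libraries.)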

-

Followed by any combination of letters, digits or the underscore character

"_".

-

Spaces are not allowed, nor are characters like "(", "{", "[", "%", "#", "?", etc.; the only exception is "_". The reason is that these characters are used for other things in C (as operators or punctuation). They are called reserved characters:

{ } [ ] ( ) - = + / ? < > . , ; : ' " ! @ # $ % ^ & * ~ ` \ |

-

Identifiers cannot be equal to the reserved keywords of C, such as "int" or "while", and it is better to also avoid the names of predefined functions such as "printf" or "main". Note that identifiers like "int1", "int_" or "Int" are allowed, although it is advised to avoid such confusing names. Note: in many programming environments, we will notice when we are using a reserved keyword because it changes color when we type it (syntax highlighting).

-

Choose your identifiers well. When a variable is used for storing interest rates, call it, for example, "interest" and not "variable1". Although it is not an error to give it the name "variable1", it is much wiser to give it a meaningful name. This helps other people to understand your program (or yourself, when you come back to the program after a long time).

-

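An identifier must be at least 1 character long. There is no strict maximum, but ANSI C only guarantees that the first 31 characters of most names are significant (C99 raised this limit), so two long names that differ only after that point may be treated as the same name. Make use of the possibility of long, descriptive names, but remember that very long names can also make the program unreadable. Choose a "golden mean". Which of the following do you think is best: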

r = r + a;

money = money + interest;

themoneyintheaccountofpersonwithnameJohnson

= themoneyintheaccountofpersonwithnameJohnson + thecurrentinterestrateatthetimeofthiswriting;

-

Identifiers are case-sensitive: "i" is not the same as "I", and so on. To make programs more readable, follow one convention throughout your program(s). The most often used convention is lowercase for variables and UPPERCASE for CONSTANTS.

Structured programming

The most important thing in programming is to write clear, logical and structured programs.


-

Use meaningful names for variables, procedures and functions.

-

Use indentation. Compare the following two programs:

#include <stdio.h>
int main(void) {printf("Hello world!\n");}

and

#include <stdio.h>

int main(void)
{
  printf("Hello world!\n");
}

Both programs do exactly the same thing, but the second one is much more readable. The main differences, together with some other guidelines, are:

-

Only put one statement per line.

-

Indent. Put two extra spaces at the beginning of the line every time we go one level "deeper" in the structure.

-

Separate blocks of code (functions and procedures) with blank lines.

-

Avoid the use of "goto" statements. With these statements, the program rapidly starts looking like spaghetti. Whereas in classic BASIC (Beginner's All-purpose Symbolic Instruction Code) the use of the GOTO statement is nearly unavoidable, in any self-respecting language the goto statement should be avoided.

-

Comment. With well-chosen names, a C program can be nearly self-explanatory. Still, in places where the idea of the program might not be clear to a reader, use comments. In C, comments are placed after // until the end of the line (a form standardized in C99), or between /* and */, which can span several lines.

-

Use functions wherever it makes the code more organized. If at many different places the program has to do basically the same thing (for instance, reading a line of text from a file), consider putting it in a procedure or function (for example FileReadLn()). This will make the program more readable, easier to maintain and shorter.

main(l
,a,n,d)char**a;{
for(d=atoi(a[1])/10*80-
atoi(a[2])/5-596;n="@NKA\
CLCCGZAAQBEAADAFaISADJABBA^\
SNLGAQABDAXIMBAACTBATAHDBAN\
ZcEMMCCCCAAhEIJFAEAAABAfHJE\
TBdFLDAANEfDNBPHdBcBBBEA_AL\
H E L L O, W O R L D! "
[l++-3];)for(;n-->64;)
putchar(!d+++33^
l&1);}

The program above shows an example of how NOT to program. Can you predict what the program does? Don't worry, most specialists cannot either. (Program copied from http://www.ioccc.org/, the International Obfuscated C Code Contest.)


Peter Stallinga. Universidade do Algarve, 14 October 2002
